State of AI Tools 2025: Proprietary Data and Token Pricing Trends (ChatGPT, Gemini, and Claude Compared)

Introduction

The State of AI Tools 2025 reveals a dramatic shift in the economics of large language models. Fierce competition among OpenAI, Google DeepMind, Anthropic, xAI, and other providers has driven token prices down by as much as 99% since early 2023. This price revolution puts powerful AI within reach of everyone, from first-time users to seasoned developers, and opens new possibilities in automation, research, and creative applications.

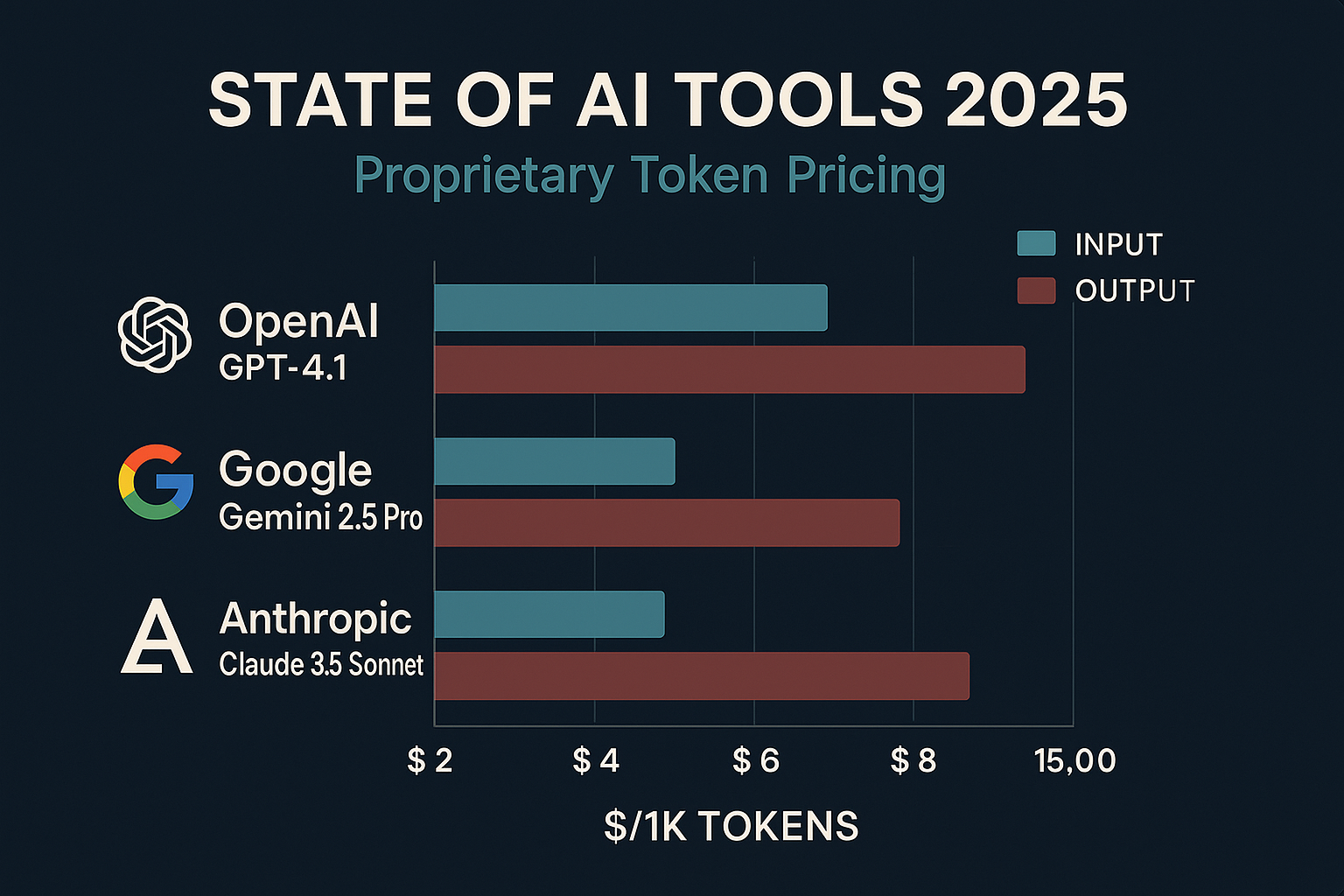

OpenAI GPT Series

OpenAI continues to lead with rapid cost reductions across its GPT family:

GPT-4.1:

Input: $2.00 per 1 M tokens

Output: $8.00 per 1 M tokens

GPT-4.1 mini:

Input: $0.40 per 1 M tokens

Output: $1.60 per 1 M tokens

GPT-4.1 nano:

Input: $0.10 per 1 M tokens

Output: $0.40 per 1 M tokens
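Because every rate is quoted per million tokens, the cost of one request is each token count divided by 1,000,000 and multiplied by its rate, summed across input and output. The Python sketch below applies that to the GPT-4.1 prices listed above. `request_cost` and the model-name keys are illustrative labels, not part of OpenAI's SDK, and the 10,000-input / 2,000-output request is a hypothetical example.

```python
# Per-1M-token list prices (USD) for the GPT-4.1 family, as quoted above.
# Keys are illustrative labels only.
PRICES = {
    "gpt-4.1": (2.00, 8.00),
    "gpt-4.1-mini": (0.40, 1.60),
    "gpt-4.1-nano": (0.10, 0.40),
}


def request_cost(input_tokens: int, output_tokens: int,
                 input_per_m: float, output_per_m: float) -> float:
    """Return the USD cost of one request given per-1M-token rates."""
    return (input_tokens * input_per_m + output_tokens * output_per_m) / 1_000_000


if __name__ == "__main__":
    # Hypothetical request: 10,000 prompt tokens and 2,000 completion tokens.
    for model, (inp, out) in PRICES.items():
        print(f"{model:14s} ${request_cost(10_000, 2_000, inp, out):.4f}")
```

For this request the three tiers come to about $0.036, $0.0072, and $0.0018, so the nano model is roughly 20 times cheaper than GPT-4.1 for identical token counts.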

Since GPT-4's March 2023 debut at $30/$60 per 1 M tokens (input/output), input prices have fallen by more than 90% and output prices by more than 80%; GPT-4.1's $2/$8 works out to reductions of roughly 93% and 87%. OpenAI's Batch API runs asynchronous jobs at roughly 50% off standard rates, making high-volume use cases even more economical.
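The percentages above follow from the standard relative-change formula, reduction = (old - new) / old. A short Python check using GPT-4's launch prices ($30/$60) and GPT-4.1's current rates ($2/$8), plus a flat 50% batch discount applied to a standard rate. `pct_reduction` and `batch_rate` are hypothetical helper names, and the flat 0.5 multiplier is an assumption based on the "roughly 50%" figure quoted.

```python
def pct_reduction(old: float, new: float) -> float:
    """Percentage drop from an old price to a new one."""
    return (old - new) / old * 100


def batch_rate(standard_rate: float, discount: float = 0.50) -> float:
    """Apply a flat batch discount (assumed 50%) to a per-1M-token rate."""
    return standard_rate * (1 - discount)


if __name__ == "__main__":
    # March 2023 launch prices vs. GPT-4.1 list prices, per 1M tokens.
    print(f"input:  {pct_reduction(30.00, 2.00):.1f}% lower")   # 93.3% lower
    print(f"output: {pct_reduction(60.00, 8.00):.1f}% lower")   # 86.7% lower
    # GPT-4.1 output rate under the assumed 50% batch discount.
    print(f"batch output rate: ${batch_rate(8.00):.2f} per 1M tokens")  # $4.00
```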

Google Gemini & Vertex AI

Google's DeepMind-powered Gemini series has matched these aggressive cuts:

Gemini 2.5 Pro (≤ 200 K context):

Input: $1.25 per 1 M tokens

Output: $10.00 per 1 M tokens

Gemini 2.5 Flash (≤ 65 K context):

Input: $0.30 per 1 M tokens

Output: $2.50 per 1 M tokens

Gemini 2.5 Flash Lite (≤ 32 K context):

Input: $0.075 per 1 M tokens

Output: $0.30 per 1 M tokens

An October 2024 update cut Gemini 1.5 Pro's input prices by 64% and output prices by 52%, setting the stage for today's highly competitive rates. Google's batch pricing likewise sits at roughly a 50% discount.
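Because each Gemini tier splits its price differently between input and output, a single "blended" price per million tokens makes the tiers easier to compare. A minimal Python sketch using the rates listed above; the 3:1 input-to-output token mix is an illustrative assumption (real ratios vary by application), and `blended_price` is a hypothetical helper name.

```python
# Per-1M-token list prices (USD) for Gemini 2.5 tiers, as quoted above.
GEMINI_PRICES = {
    "Gemini 2.5 Pro": (1.25, 10.00),
    "Gemini 2.5 Flash": (0.30, 2.50),
    "Gemini 2.5 Flash Lite": (0.075, 0.30),
}


def blended_price(input_per_m: float, output_per_m: float,
                  input_ratio: float = 3.0, output_ratio: float = 1.0) -> float:
    """Weighted-average USD price per 1M tokens for an input:output token mix.

    The default 3:1 mix is an assumption, not a provider figure.
    """
    total = input_ratio + output_ratio
    return (input_per_m * input_ratio + output_per_m * output_ratio) / total


if __name__ == "__main__":
    for name, (inp, out) in GEMINI_PRICES.items():
        print(f"{name:22s} ${blended_price(inp, out):.4f} per 1M tokens (3:1 mix)")
```

Under this assumed mix, Pro blends to about $3.44, Flash to $0.85, and Flash Lite to about $0.13 per million tokens; shifting the ratio toward output widens the gap, since output rates differ more between tiers.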

Anthropic Claude Family

Anthropic delivers a tiered approach with flagship performance at a premium:

Claude Opus 4: $15 / $75 per 1 M tokens (input/output)

Claude 3.5 Sonnet: $3 / $15 per 1 M tokens

Claude 3.5 Haiku: $0.80 / $4.00 per 1 M tokens

Batch processing is billed at half price across all Claude models; combined with the low base rates of the smallest variants such as Claude 3.5 Haiku, this makes them well suited to cost-sensitive applications.
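To see what the half-price batch rate means in dollars, the sketch below prices a hypothetical job of 1,000 requests, each with 2,000 input and 500 output tokens, at the Claude list prices above, with and without a flat 50% batch discount. The workload size, the `job_cost` helper, and the flat-discount model are illustrative assumptions.

```python
# Per-1M-token list prices (USD) for the Claude models, as quoted above.
CLAUDE_PRICES = {
    "Claude Opus 4": (15.00, 75.00),
    "Claude 3.5 Sonnet": (3.00, 15.00),
    "Claude 3.5 Haiku": (0.80, 4.00),
}
BATCH_DISCOUNT = 0.50  # assumed flat half-price rate for batch jobs


def job_cost(requests: int, in_tokens: int, out_tokens: int,
             input_per_m: float, output_per_m: float, batch: bool = False) -> float:
    """USD cost of a job of identical requests, optionally at batch rates."""
    cost = requests * (in_tokens * input_per_m + out_tokens * output_per_m) / 1_000_000
    return cost * (1 - BATCH_DISCOUNT) if batch else cost


if __name__ == "__main__":
    # Hypothetical job: 1,000 requests x (2,000 input + 500 output tokens).
    for model, (inp, out) in CLAUDE_PRICES.items():
        std = job_cost(1_000, 2_000, 500, inp, out)
        bat = job_cost(1_000, 2_000, 500, inp, out, batch=True)
        print(f"{model:18s} standard ${std:7.2f}   batch ${bat:7.2f}")
```

In this example the batch discount halves every bill (Opus drops from $67.50 to $33.75, Haiku from $3.60 to $1.80), but the gap between tiers, about 19x between Opus and Haiku, is unchanged, so model choice still dominates total cost.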

Other Notable Providers

xAI Grok-3: $3 / $15 per 1 M tokens; Grok-3 mini at $0.30 / $0.50

Meta Llama 3 Pro (Azure): ≈$2 / $10 per 1 M tokens

Open-source models: no per-token fees when self-hosted; only infrastructure costs apply

These alternatives round out a diverse ecosystem, where specialized and niche models compete on both performance and price.
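For self-hosted open-source models, "no per-token fees" still translates into an effective per-token cost once infrastructure is counted: divide the server's hourly cost by the tokens it actually produces per hour. A rough Python sketch; the $2.00/hour rate, 1,000 tokens/second throughput, and 50% utilization are hypothetical placeholders, not measured benchmarks, and `effective_cost_per_m` is an illustrative name.

```python
def effective_cost_per_m(hourly_cost: float, tokens_per_second: float,
                         utilization: float = 1.0) -> float:
    """Effective USD per 1M tokens for a self-hosted model.

    hourly_cost: what the server costs per hour (hypothetical input).
    tokens_per_second: sustained throughput while the server is busy.
    utilization: fraction of each hour the server is actually busy, in (0, 1].
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    tokens_per_hour = tokens_per_second * 3600 * utilization
    return hourly_cost / tokens_per_hour * 1_000_000


if __name__ == "__main__":
    # Hypothetical: $2.00/hour GPU, 1,000 tokens/s, busy half of the time.
    print(f"${effective_cost_per_m(2.00, 1_000, 0.5):.2f} per 1M tokens")  # $1.11
```

The utilization term is the one to watch: an idle server still bills by the hour, so at low traffic self-hosting can cost more per token than the API prices listed above.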

Key Takeaways

Token prices have plunged 80 to 99% since 2023, democratizing access to premium AI.

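Output tokens typically cost 4 to 8 times as much as input tokens, reflecting their higher computational cost: each output token is generated in its own sequential decoding step, while input tokens are processed in parallel.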

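Flagship models such as GPT-4.1 and Gemini 2.5 Pro offer context windows of roughly 1 M tokens, though the longest prompts can fall into a higher pricing tier (Gemini 2.5 Pro's listed rates cover prompts up to 200 K tokens).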

Batch and async APIs generally deliver a 50% discount, ideal for high-volume workloads.

Ongoing price competition means rates can change quickly; bookmark providers' pricing pages to stay current.
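The output-versus-input rule of thumb in the takeaways above can be checked directly against the rates quoted earlier in this article. A minimal Python sketch; the dictionary restates a subset of those list prices, and `output_input_ratio` is a hypothetical helper name.

```python
# (input, output) USD per 1M tokens, restated from the sections above.
RATES = {
    "GPT-4.1": (2.00, 8.00),
    "GPT-4.1 nano": (0.10, 0.40),
    "Gemini 2.5 Pro": (1.25, 10.00),
    "Gemini 2.5 Flash": (0.30, 2.50),
    "Claude Opus 4": (15.00, 75.00),
    "Claude 3.5 Haiku": (0.80, 4.00),
    "Grok-3": (3.00, 15.00),
    "Grok-3 mini": (0.30, 0.50),
}


def output_input_ratio(input_per_m: float, output_per_m: float) -> float:
    """How many times more an output token costs than an input token."""
    return output_per_m / input_per_m


if __name__ == "__main__":
    # Print models from the smallest to the largest output/input ratio.
    for model, (inp, out) in sorted(RATES.items(),
                                    key=lambda kv: output_input_ratio(*kv[1])):
        print(f"{model:18s} {output_input_ratio(inp, out):.2f}x")
```

Most of the listed models land between 4x and about 8x (Gemini 2.5 Flash sits slightly above at about 8.3x), while Grok-3 mini, at about 1.7x, is a clear exception, so the rule of thumb is worth checking per model.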

Future Outlook

Continued price wars will push "mini" and "lite" model rates lower still.

Bundled subscriptions, volume tiers, and enterprise discounts will proliferate.

Multimodal and extended-context models will see broader adoption at mass-market prices.

Open-source self-hosting may see a resurgence as the cost of running models approaches zero.